The Evolution of AI in Business

- Nan Braun

- Jul 23, 2025

- 2 min read

The Die is Cast

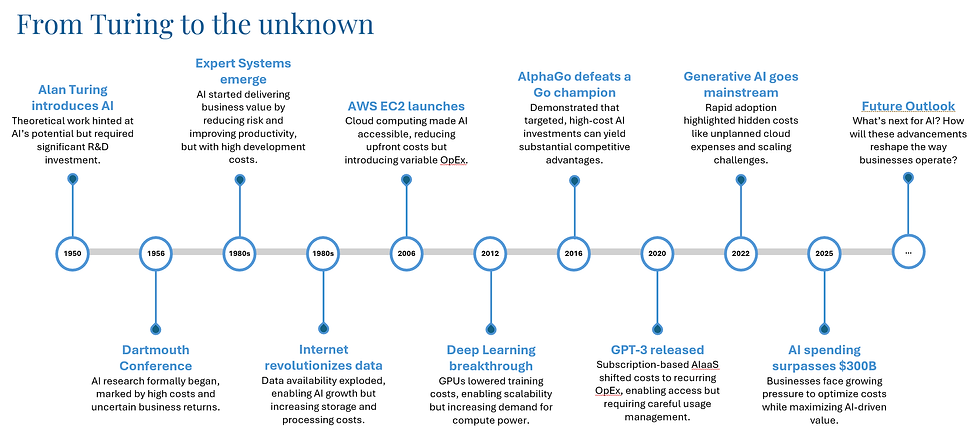

The roots of AI can be traced back to the 1950s, when Alan Turing introduced the concept of machine intelligence. The term "Artificial Intelligence" was coined for the 1956 Dartmouth Conference, where researchers envisioned machines performing tasks traditionally requiring human intelligence. Early applications focused on rule-based systems, which laid the foundation for today’s machine learning and neural networks.

In the 1980s, commercial AI largely took the form of “Expert Systems”, used primarily to automate human actions on the plant floor or to support complex decision-making in discrete fields such as credit risk assessment and oil and gas exploration. The key limitations at the time were computing power and the availability and richness of data to inform decision-making.

Advances in computing hardware and the explosion of internet-driven data in the 1990s and 2000s fueled AI research and adoption, particularly within very large organizations. AI is now integrated into our daily lives, from mobile devices to online shopping and media consumption. Think of how often we interact with AI-powered recommendation engines like those from Amazon or Netflix. Enterprise organizations are also applying AI-driven algorithms with great success, though commercial adoption has brought potential pitfalls alongside groundbreaking advancements.

The Ever-Changing Nature of AI

Today, AI is often discussed using buzzwords like LLMs (Large Language Models) or GenAI (Generative AI). At its core, AI consists of computer code designed to process inputs – such as images, videos, audio, or text – analyze them, and generate specific outputs. In Analytic AI implementations, this may involve highly specialized tasks, such as vision systems detecting cancer cells in medical images. In Generative AI, the use cases are broader, such as chatbot interactions. The software itself is trained over time, learning from vast datasets. Organizations developing new proprietary AI may conduct this training internally, while commercial solutions from companies like Microsoft, Google, Amazon, NVIDIA, or OpenAI typically arrive already trained.

All AI software must run on infrastructure, either in the cloud or on-premises within an organization’s data centers. The choice between these options has significant cost and operational implications. Cloud-based AI offers scalability and flexibility but can result in unpredictable, variable expenses. On-premises AI provides greater cost control but requires upfront hardware investments and ongoing maintenance.
